VideoHandles: Editing 3D Object Compositions in Videos Using Video Generative Priors

TL;DR: VideoHandles is the first method for 3D object composition editing in videos without any training. It achieves temporally consistent and context-aware edits using a video generative prior.

Context-Aware 3D Edits in Videos

Input Video

Edited Video

Solid axes represent the original 3D position; dotted axes represent the user-provided target position. VideoHandles produces plausible edits, such as generating a new reflection for the wine glass and handling the disocclusion of the lamp revealed behind the moved book pile.

Overview

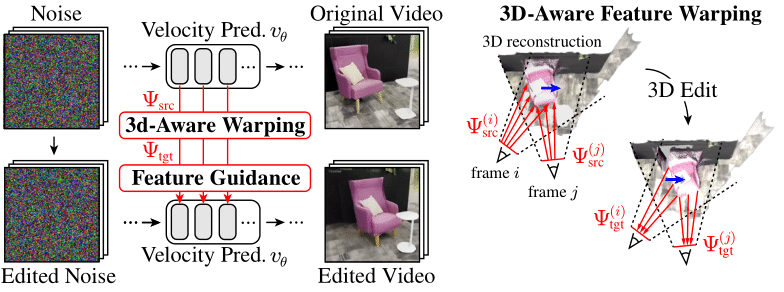

Using a 3D reconstruction of the source video, we introduce a 3D-aware warping function that ensures frame-consistent transformations. This function warps the intermediate features of a video generative model, guiding the generative process to place the object at the target position while keeping effects such as shadows and reflections plausible.
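To make the idea of a 3D-aware warp concrete, the following is a minimal sketch for a single frame, not the authors' implementation. It assumes the reconstruction provides a per-pixel 3D point map, an object mask, pinhole intrinsics `K`, and a world-to-camera matrix, and that the user edit is a rigid 4x4 transform. The function name `warp_object_features`, all parameter names, and the nearest-pixel splatting with a depth buffer are illustrative assumptions; how the actual method resamples features inside the generative model is not specified here.

```python
import numpy as np

def warp_object_features(features, points, mask, K, world_to_cam, transform):
    """Forward-warp the object's features of one frame to its target 3D pose.

    Hypothetical helper; all inputs are assumed to come from a 3D reconstruction.
    features:     (H, W, C) feature map for this frame
    points:       (H, W, 3) per-pixel 3D points in world coordinates
    mask:         (H, W) bool, True on object pixels
    K:            (3, 3) pinhole camera intrinsics
    world_to_cam: (4, 4) extrinsics for this frame
    transform:    (4, 4) rigid transform from original to target position
    Returns the warped feature map and a bool map of pixels that were written.
    """
    H, W, _ = features.shape
    warped = np.zeros_like(features)
    hit = np.zeros((H, W), dtype=bool)
    depth = np.full((H, W), np.inf)  # depth buffer: nearest point wins

    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return warped, hit

    # Move the object's 3D points to the target pose, then into camera space.
    p_world = np.concatenate([points[ys, xs], np.ones((ys.size, 1))], axis=1)
    p_cam = (world_to_cam @ transform @ p_world.T).T[:, :3]

    for (x, y, z), src_y, src_x in zip(p_cam, ys, xs):
        if z <= 1e-8:  # skip points behind the camera
            continue
        u_h, v_h, w_h = K @ np.array([x, y, z])
        u, v = int(np.rint(u_h / w_h)), int(np.rint(v_h / w_h))
        if 0 <= u < W and 0 <= v < H and z < depth[v, u]:
            depth[v, u] = z
            warped[v, u] = features[src_y, src_x]
            hit[v, u] = True
    return warped, hit
```

Because the same rigid transform is applied to 3D points in every frame and only the per-frame camera changes, the warped object stays consistent across frames as the camera moves. Pixels that receive no feature (where `hit` is False) are the ones a generative prior would have to fill in, for example disoccluded background.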

More Results

VideoHandles with CogVideoX

Input

Output

VideoHandles does not depend on a particular video generative model. Applying VideoHandles to a more recent and more advanced model, CogVideoX, produces much sharper and more detailed outputs.

Long and Stylized Video Editing

Input

Output

Real-World Video Editing

Input

Output

Baseline Comparison

Click here for more comparison results.

Ablation Study

Click here for more ablation study results.

BibTeX

@inproceedings{Koo:2025VideoHandles,
  title = {VideoHandles: Editing 3D Object Compositions in Videos Using Video Generative Priors},
  author = {Koo, Juil and Guerrero, Paul and Huang, Chun-Hao Paul and Ceylan, Duygu and Sung, Minhyuk},
  booktitle = {CVPR},
  year = {2025}
}